Converting document formats with Pandoc

When I set out to convert my blog from a nearly 10-year-old, home-brewed ASP.NET system to the spanking new Ghost blog engine, one of the trickier problems I encountered was converting my 70-odd existing posts from HTML into Markdown. It was tricky because my HTML had, well, all kinds of stuff in it: arbitrary class names, IDs and such. Scrubbing all of it and producing something usable was not a task I was looking forward to. As it turned out, I discovered an absolutely awesome tool called Pandoc which was just the thing I needed. Pandoc is a "universal document converter" and here's how its author John MacFarlane, a professor of philosophy at the University of California, Berkeley, chooses to describe it:

If you need to convert files from one markup format into another, Pandoc is your swiss-army knife.

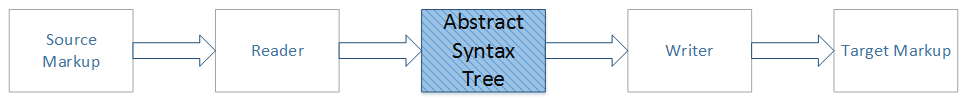

Pandoc works by connecting readers and writers via an intermediate abstract syntax tree (AST) representation of the document, which it can also serialize as JSON.

This allows one to code up readers and writers independently, each targeting just the AST, and automatically get conversion between pretty much all supported formats. The tool ships with a comprehensive library of readers and writers including, happily for me, a reader that can parse HTML and a writer that can produce Markdown. You convert an HTML document into Markdown by running the tool from the command line like so:

pandoc -f html input.html -t markdown_strict -o output.md

Here, -f indicates that the source format is HTML, -t indicates that the target format is Markdown (markdown_strict selects the original Markdown syntax without pandoc's extensions) and -o gives the name of the output file. If you don't supply an input file name or the -o option then the tool will simply read from standard input and write to standard output respectively.

Manipulating the AST

The real power of the tool, in my opinion, lies in the fact that you can have the tool output the AST representation of the source document as JSON which you can then programmatically manipulate any way you like. Here's how you generate the JSON for a given HTML document:

pandoc -f html input.html -t json -o output.json
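To make the shape of that JSON a little more concrete, here is a small hand-written fragment for a paragraph that contains an empty <strong> tag. Treat it as an illustration rather than verbatim pandoc output: the two-element [metadata, blocks] layout is the one the rest of this post relies on, and the exact JSON differs between pandoc versions (newer releases wrap the blocks in an object with a "blocks" key). The countNodeTypes helper is a made-up name that exists only to show how t (the node type) and c (the node's contents) nest.

```javascript
// Hand-written sample in the [metadata, blocks] layout used in this post.
// Illustrative only; real pandoc output varies by version.
var sampleAst = [
  { unMeta: {} },
  [
    {
      t: "Para",
      c: [
        { t: "Str", c: "Hello" },
        { t: "Space", c: [] },
        { t: "Strong", c: [] },                         // an empty <strong></strong>
        { t: "Space", c: [] },
        { t: "Strong", c: [{ t: "Str", c: "world" }] }  // a non-empty one
      ]
    }
  ]
];

// Hypothetical helper: tally how often each node type occurs in the tree.
function countNodeTypes(node, counts) {
  counts = counts || {};
  if (Array.isArray(node)) {
    node.forEach(function (child) { countNodeTypes(child, counts); });
  } else if (node !== null && typeof node === "object") {
    if (typeof node.t === "string") {
      counts[node.t] = (counts[node.t] || 0) + 1;
    }
    countNodeTypes(node.c, counts);
  }
  return counts;
}

var counts = countNodeTypes(sampleAst[1]);
console.log(counts);
```

Every node is just an object with a t and a c, and c is either a plain value or an array of further nodes, which is what makes the tree so easy to process with a few lines of script.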

Pandoc also supports reading from standard input and writing to standard output which, combined with some pipes and redirection, lets you build some really nifty document processing pipelines. In my case, the Markdown produced by Pandoc for some of my posts had some problems. For example, in some cases the output would include markup like this - **** - which is what empty <b></b> or <strong></strong> tags in the source HTML turn into. I wrote a little node.js app to fix this. The app reads JSON from standard input and writes the processed JSON to standard output. Once I had it working the way I wanted, I was able to put together a command such as the following:

pandoc -f html input.html -t json | node app.js | pandoc --no-wrap -f json -t markdown_strict -o output.md

The first part of the command converts the input HTML document into its AST representation and writes that to standard output as JSON. The JSON is piped to the node.js app which loads it into memory, walks the tree, removes any empty Strong elements and writes the modified AST as JSON to standard output. That JSON is then piped back to pandoc, which converts it to Markdown; --no-wrap tells it not to hard-wrap the output lines (newer pandoc releases spell this --wrap=none). Easy-peasy!

Pandoc filters

The node.js app that removed the redundant Strong elements is an example of a filter app. The source for the filters I used to scrub the posts from my blog is available on GitHub. First I implemented the filters I needed as an array of objects in filters.js, each with a name, the node type it applies to and an apply function. Here, for example, is the filter that removes empty Strong elements:

{
    name: "Remove empty bold/strong tags",
    nodeType: "Strong",
    apply: function(content) {
        // if content's c array is empty then remove the
        // node
        if(content &&
           content["c"] &&
           Util.isArray(content.c) &&
           content.c.length === 0 ) {
            return null;
        }

        return content;
    }
}
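A filter like this is easy to try out on its own before wiring it into the pipeline. The snippet below is a stand-alone copy of the filter with two hand-made sample nodes; the variable names are made up for the example, and it uses the built-in Array.isArray in place of Util.isArray so that it needs no imports.

```javascript
// Stand-alone copy of the filter above. Array.isArray replaces Util.isArray
// here purely so the snippet has no dependencies.
var removeEmptyStrong = {
  name: "Remove empty bold/strong tags",
  nodeType: "Strong",
  apply: function (content) {
    if (content && Array.isArray(content.c) && content.c.length === 0) {
      return null;    // signals "drop this node"
    }
    return content;   // keep the node unchanged
  }
};

// Hand-made sample nodes (hypothetical data, not real pandoc output).
var emptyStrong = { t: "Strong", c: [] };
var filledStrong = { t: "Strong", c: [{ t: "Str", c: "bold" }] };

var dropped = removeEmptyStrong.apply(emptyStrong);
var kept = removeEmptyStrong.apply(filledStrong);

console.log(dropped);                // the empty node is rejected
console.log(kept === filledStrong);  // the non-empty node passes through
```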

In app.js I first load up the JSON from standard input like so:

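// Q is the promise library used by app.js
// (assumed here to be the "q" package from npm)
var Q = require("q");

// read all of standard input and resolve with it as one string
function loadJsonAsync() {
    var deferred = Q.defer();
    var json = "";

    process.stdin.setEncoding("utf8");
    process.stdin.on("readable", function() {
        // drain everything that is currently buffered
        var chunk;
        while((chunk = process.stdin.read()) !== null) {
            json += chunk;
        }
    });
    process.stdin.on("end", function() {
        deferred.resolve(json);
    });

    return deferred.promise;
}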
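The same idea can be written without a promise library on a Node.js version that has native promises. The following is a sketch under that assumption rather than the code from app.js: readAll is a hypothetical name, and an in-memory stream stands in for process.stdin so that the snippet can be run by itself.

```javascript
var Readable = require("stream").Readable;

// Sketch: collect a readable stream into a single string.
// readAll is a hypothetical helper, not part of the original app.js.
function readAll(stream) {
  return new Promise(function (resolve, reject) {
    var text = "";
    stream.setEncoding("utf8");
    stream.on("data", function (chunk) { text += chunk; });
    stream.on("end", function () { resolve(text); });
    stream.on("error", reject);
  });
}

// Demo: an in-memory stream plays the role of process.stdin and
// delivers the document in two chunks.
var demo = new Readable({ read: function () {} });
var pending = readAll(demo).then(function (text) {
  console.log("top-level items:", JSON.parse(text).length);
  return text;
});
demo.push('[{"unMeta":{}},');
demo.push("[]]");
demo.push(null); // signals end of input
```

In a real filter the call would simply be readAll(process.stdin), with the parsing and filtering done in the then callback.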

The filters are initialized via require like this:

var Filters = require("./filters.js").Filters;

And then the app proceeds to process the JSON like so:

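// load up the json from stdin
loadJsonAsync().done(function(json) {
    // with the pandoc version used here the parsed document is a
    // two-element array: ast[0] holds the metadata and ast[1] the
    // list of block nodes
    var ast = JSON.parse(json);

    // apply all of our filters
    Filters.forEach(function(filter) {
        ast[1] = walk(ast[1], filter);
    });

    // write the modified AST back out for pandoc to pick up
    console.log(JSON.stringify(ast));
});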

walk is a helper function I wrote to recursively visit each node in the syntax tree and apply the supplied filter on it. Here's the full function implementation:

function walk(content, filter) {
    // plain values such as strings and numbers are returned untouched
    if(typeof(content) !== "object") {
        return content;
    }

    if(Util.isArray(content)) {
        // walk every item and drop the ones a filter removed
        return content.map(function(item) {
            return walk(item, filter);
        }).filter(function(item) {
            return (item !== null);
        });
    } else {
        if(filter.nodeType === "*" ||
           filter.nodeType === content.t) {
            // If a filter's `apply` method returns null, the node is
            // removed from the AST. If `apply` returns an object
            // then the node is replaced with what's returned from
            // the filter. If `apply` returns an array then the
            // array is meant to be spliced in (not implemented yet).
            var node = filter.apply(content);
            if(node) {
                // splice array if it's an array
                if(Util.isArray(node)) {
                    throw new Error("Not implemented yet.");
                } else {
                    content.t = node.t;
                    content.c = node.c;
                }
            } else {
                return null;
            }
        }

        // recurse into the node's contents
        if(content.c) {
            return {
                t: content.t,
                c: walk(content.c, filter)
            };
        }
    }

    // Reached only for nodes with no `c` value (or a falsy one such
    // as an empty string); such nodes are returned unchanged.
    return content;
}
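The one case walk does not handle is a filter that returns an array. One possible way to fill that gap is sketched below. It is an untested-in-anger illustration rather than part of my filters: walkSplicing and unwrapStrong are hypothetical names, the sample data is hand-made, and the replacement nodes a filter returns are inserted as they are without being walked again.

```javascript
// Sketch of a walk variant where a filter may return an array of nodes
// that is spliced into the parent in place of the original node.
function walkSplicing(content, filter) {
  if (content === null || typeof content !== "object") {
    return content;                        // plain values pass through
  }
  if (Array.isArray(content)) {
    var out = [];
    content.forEach(function (item) {
      var result = walkSplicing(item, filter);
      if (result === null && item !== null) {
        return;                            // node was removed by the filter
      }
      if (Array.isArray(result) && !Array.isArray(item)) {
        out.push.apply(out, result);       // a node became several: splice
      } else {
        out.push(result);                  // ordinary item or nested array
      }
    });
    return out;
  }
  var node = content;
  if (filter.nodeType === "*" || filter.nodeType === content.t) {
    node = filter.apply(content);
    if (node === null || node === undefined) {
      return null;                         // remove the node
    }
    if (Array.isArray(node)) {
      return node;                         // the parent splices these in
    }
  }
  if (node.c !== undefined) {
    return { t: node.t, c: walkSplicing(node.c, filter) };
  }
  return node;
}

// Hypothetical filter: strip the bold but keep whatever was inside it.
var unwrapStrong = {
  name: "Unwrap bold/strong tags",
  nodeType: "Strong",
  apply: function (content) { return content.c; }
};

var blocks = [{
  t: "Para",
  c: [
    { t: "Strong", c: [] },
    { t: "Strong", c: [{ t: "Str", c: "bold" }, { t: "Space", c: [] }, { t: "Str", c: "text" }] },
    { t: "Str", c: "plain" }
  ]
}];

var unwrapped = walkSplicing(blocks, unwrapStrong);
console.log(JSON.stringify(unwrapped));
```

With this filter an empty Strong node turns into an empty array, so it disappears just as it does with the null-returning filter, while a non-empty one is replaced by its children.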

And that's about it. I wrote a little driver program to iterate through all my 70-odd posts and run each of them through my pandoc-based document processing pipeline, leaving me with pristine Markdown that I could then load up into Ghost! A few of the posts still needed manual tweaks but those were few and far between and definitely doable by hand. Yay pandoc!
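A driver of this kind might look roughly like the sketch below. It is not the original driver program: buildPipeline and convertAll are hypothetical names, the directory names in the usage comment are made up, and it assumes that pandoc and app.js are reachable from the working directory. The pipeline itself is the command shown earlier, flags included.

```javascript
var fs = require("fs");
var path = require("path");
var execSync = require("child_process").execSync;

// Build the shell pipeline from this post for a single document.
// JSON.stringify is used as simple double-quoting for the file names; it
// copes with spaces but is not a general-purpose shell escaping scheme.
function buildPipeline(inputFile, outputFile) {
  return [
    "pandoc -f html " + JSON.stringify(inputFile) + " -t json",
    "node app.js",
    "pandoc --no-wrap -f json -t markdown_strict -o " + JSON.stringify(outputFile)
  ].join(" | ");
}

// Convert every .html file in inputDir into a .md file in outputDir.
function convertAll(inputDir, outputDir) {
  fs.readdirSync(inputDir)
    .filter(function (name) { return path.extname(name) === ".html"; })
    .forEach(function (name) {
      var input = path.join(inputDir, name);
      var output = path.join(outputDir, path.basename(name, ".html") + ".md");
      execSync(buildPipeline(input, output), { stdio: "inherit" });
    });
}

// Usage (needs pandoc installed; directory names are placeholders):
// convertAll("posts-html", "posts-md");
var exampleCommand = buildPipeline("post.html", "post.md");
console.log(exampleCommand);
```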